
- Extensible: You can easily define your own operators and extend libraries to fit the level of abstraction that works best for your environment.
- Elegant: Airflow pipelines are simple and to the point. Scripts are parameterized with the Jinja templating engine.
- Scalable: Airflow has a modular architecture and uses a message queue to orchestrate an arbitrary number of workers.
- Robust Integrations: Airflow readily integrates with commonly used services such as Google Cloud Platform, Amazon Web Services, Microsoft Azure, and many other third-party services.

What is Azure Data Factory (ADF)?

Image Source: Data Scientest

Microsoft Azure Data Factory (ADF) is a fully managed cloud service within Microsoft Azure for building ETL pipelines. It enables organizations to ingest, prepare, and transform data from different sources, whether on-premises or cloud data stores. Using ADF, your business can create and schedule data-driven workflows (called pipelines) and complex ETL processes. ADF transforms your data using native compute services such as Azure HDInsight Hadoop, Azure Databricks, and Azure SQL Database. The results can then be pushed to data stores such as Azure Synapse Analytics for business intelligence (BI) applications to consume. ADF also provides a rich graphical interface for monitoring your data flows, along with automation tools for routine tasks.
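The following is a minimal, dependency-free sketch of the Extensible and Elegant features listed earlier. In Airflow itself you would subclass `BaseOperator`, implement `execute(context)`, and list the attributes to render with Jinja in `template_fields`. The code below only mimics that shape in plain Python so it runs without Airflow. The `Operator` base class and `ExportTableOperator` are hypothetical. The standard library's `string.Template` (`$ds` placeholders) stands in for Jinja (`{{ ds }}` placeholders). In Airflow, `ds` is the run's logical date.

```python
from abc import ABC, abstractmethod
from string import Template


class Operator(ABC):
    """Hypothetical base class that mimics the shape of an Airflow operator.

    This is NOT Airflow's BaseOperator; it only illustrates the pattern.
    """

    # Names of attributes whose $placeholders are filled in before execution.
    template_fields: tuple[str, ...] = ()

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id

    def render_templates(self, context: dict[str, str]) -> None:
        # string.Template stands in for Jinja here: "$ds" instead of "{{ ds }}".
        for field in self.template_fields:
            raw = getattr(self, field)
            setattr(self, field, Template(raw).substitute(context))

    @abstractmethod
    def execute(self, context: dict[str, str]) -> str:
        """Do the task's work; subclasses must implement this."""


class ExportTableOperator(Operator):
    """Hypothetical custom operator illustrating 'define your own operators'."""

    template_fields = ("output_path",)

    def __init__(self, task_id: str, table: str, output_path: str) -> None:
        super().__init__(task_id)
        self.table = table
        self.output_path = output_path

    def execute(self, context: dict[str, str]) -> str:
        # A real operator would export data; this one only describes the action.
        return f"exporting {self.table} to {self.output_path}"


op = ExportTableOperator(
    "export_orders", table="orders", output_path="/data/orders/$ds.csv"
)
context = {"ds": "2024-01-01"}
op.render_templates(context)
print(op.execute(context))  # exporting orders to /data/orders/2024-01-01.csv
```

The templated field is rendered just before `execute`. This lets one operator class serve every run with a different date. Airflow applies the same idea to the fields named in `template_fields`.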

#Data pipelines with Apache Airflow code#

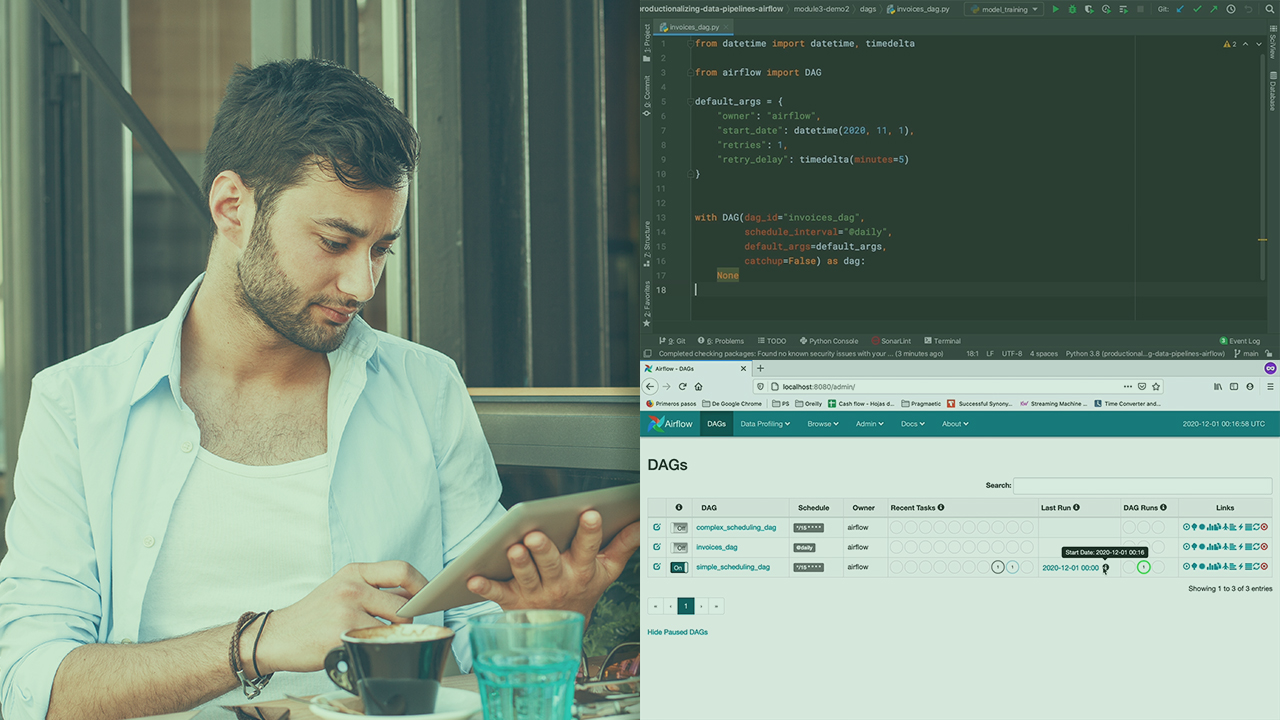

- Dynamic: Airflow pipelines are defined as Python code, which allows them to be generated dynamically. You can write code that instantiates pipelines on the fly.
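Because pipelines are plain Python, a loop over configuration can stamp out one pipeline per data source. The sketch below shows that idea without Airflow. `PipelineSpec`, `make_pipeline`, and the `SOURCES` list are hypothetical names chosen for illustration. In a real DAG file you would construct an Airflow `DAG` object inside a similar loop. Airflow's documentation covers this pattern under dynamic DAG generation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineSpec:
    """Hypothetical, minimal pipeline description: an id and its ordered task ids."""

    pipeline_id: str
    tasks: tuple[str, ...]


def make_pipeline(source: str) -> PipelineSpec:
    # Every source gets the same extract -> transform -> load shape.
    steps = ("extract", "transform", "load")
    return PipelineSpec(
        pipeline_id=f"etl_{source}",
        tasks=tuple(f"{step}_{source}" for step in steps),
    )


# Adding a name here adds a pipeline; nothing else is written by hand.
SOURCES = ["orders", "customers", "payments"]
PIPELINES = {spec.pipeline_id: spec for spec in map(make_pipeline, SOURCES)}

for pipeline_id, spec in PIPELINES.items():
    # ">>" echoes Airflow's bitshift syntax for task dependencies.
    print(pipeline_id, "->", " >> ".join(spec.tasks))
```

The first line printed is `etl_orders -> extract_orders >> transform_orders >> load_orders`. Keeping the pipeline shape in one function means a change to it applies to every source at once.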


#How to build data pipelines with Apache Airflow#

What is Airflow?

Image Source: Apache Software Foundation

When working with large teams or big projects, you will have recognized the importance of workflow management. It's essential to keep track of activities and not get lost in a sea of tasks. Workflow management tools address this by organizing your workflows, campaigns, projects, and tasks. They coordinate not only your actions but also the way you manage them.

Apache Airflow is one such open-source workflow management tool. It is used to programmatically author, schedule, and monitor workflows. Built around a systematic workflow engine, Apache Airflow can:

- Schedule jobs in the right order based on their dependencies.
- Track the state of jobs and recover from failure.

Today's Apache Airflow is a revamp of the original "Airflow" project, which started in 2014 to manage Airbnb's complex workflows. It is written in Python and uses Python scripts to orchestrate workflows. Since 2016, when Airflow joined the Apache Incubator, more than 200 companies have benefited from it, including Airbnb, Yahoo, PayPal, Intel, Stripe, and many more.

Here are some informative blogs on Apache Airflow features and use cases:

- Airflow Tasks: The Ultimate Guide for 2022
- How to Schedule Tasks & DAG Runs with Airflow Scheduler

Key features of Apache Airflow:

- Easy to Use: If you are already familiar with standard Python scripts, you know how to use Apache Airflow.
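The dependency ordering and failure recovery described in this section can be sketched with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). This is a hypothetical mini-scheduler for illustration, not how Airflow's scheduler is implemented. It runs each task only after its upstream tasks succeed and retries a failing task up to `max_retries` times. It also records a final state per task. The state names (`success`, `up_for_retry`, `failed`, `upstream_failed`) are borrowed from Airflow's task states. `run_pipeline` and its parameters are assumptions made for this example.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_pipeline(
    tasks: dict[str, Callable[[], None]],
    deps: dict[str, set[str]],
    max_retries: int = 2,
) -> dict[str, str]:
    """Hypothetical mini-scheduler: run tasks in dependency order with retries.

    `deps` maps a task name to the set of task names it depends on; every
    name in `deps` must also be a key of `tasks`. Returns each task's state.
    """
    # Every task becomes a node, even if it has no upstream dependencies.
    graph = {name: deps.get(name, set()) for name in tasks}
    state = {name: "queued" for name in tasks}

    # static_order() yields each task only after all of its upstream tasks.
    for name in TopologicalSorter(graph).static_order():
        # Skip a task unless every upstream task succeeded.
        if any(state[up] != "success" for up in graph[name]):
            state[name] = "upstream_failed"
            continue
        # One initial attempt plus up to max_retries retries.
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                state[name] = "success"
                break
            except Exception:
                state[name] = "up_for_retry" if attempt < max_retries else "failed"
    return state


attempts = {"extract": 0}


def flaky_extract() -> None:
    # Fails on the first call only, simulating a transient error.
    attempts["extract"] += 1
    if attempts["extract"] == 1:
        raise RuntimeError("transient source outage")


result = run_pipeline(
    {"extract": flaky_extract, "transform": lambda: None, "load": lambda: None},
    {"transform": {"extract"}, "load": {"transform"}},
)
print(result)  # {'extract': 'success', 'transform': 'success', 'load': 'success'}
```

The sketch marks downstream tasks `upstream_failed` instead of running them. This keeps a failed extract from feeding a load step with missing data. A real scheduler such as Airflow's also persists task state in a metadata database, so runs can be inspected and resumed.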
